AI-Powered OCR for Deciphering 7 Diverse Alphabets: A Technical Overview

AI-driven OCR Tackles Challenging Scripts Like Devanagari and Arabic

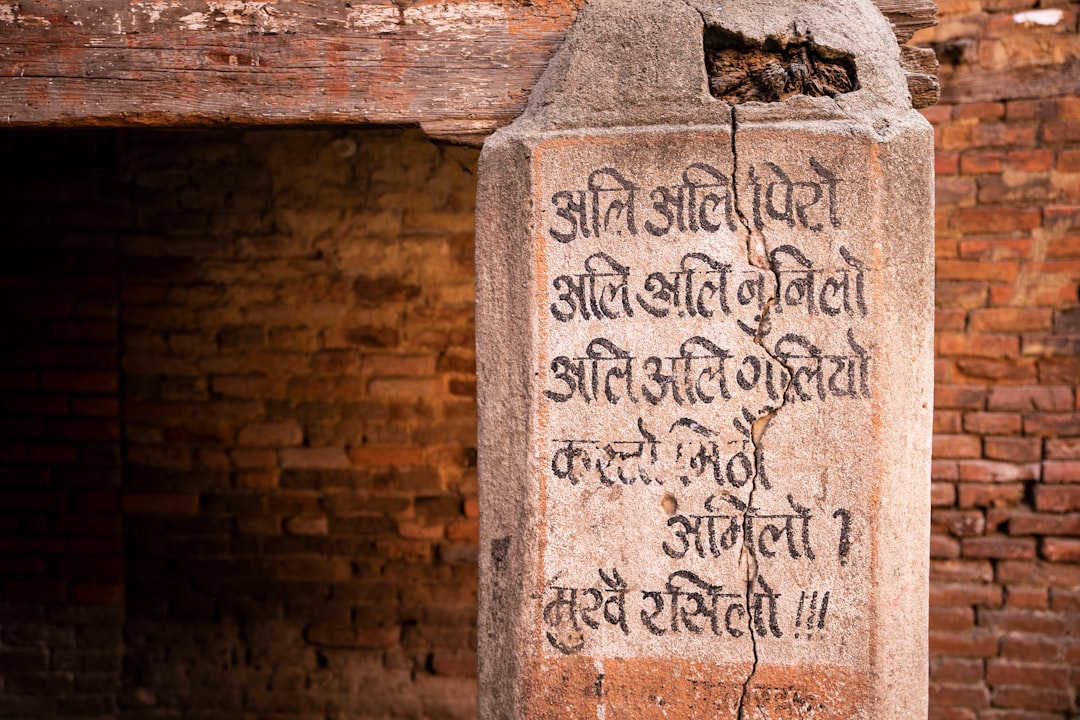

AI-powered OCR is making impressive progress in tackling challenging scripts like Devanagari and Arabic. Traditional OCR methods struggled with these scripts due to their unique structures and complexities. But AI, through machine learning, can analyze massive amounts of data to learn the nuances of these languages, making it adept at recognizing various fonts and even cursive writing. This means not only better accuracy but also efficiency in data extraction, leading to streamlined workflows in a range of applications. The potential is immense, with generative AI and deep learning constantly pushing the boundaries of what OCR can achieve.

It's fascinating to see how AI is tackling the intricacies of scripts like Devanagari and Arabic. The cursive nature of Arabic, where letters morph depending on their position within a word, poses a significant hurdle for traditional OCR. This is where advanced algorithms trained on massive datasets come in, capable of recognizing the various forms and connections of letters.
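
To make the positional-form problem concrete, here is a minimal sketch using Python's standard `unicodedata` module. It lists the four Unicode presentation forms of a single letter, beh, and folds them back to one base code point. The choice of beh and the helper name `fold_presentation_forms` are assumptions made for illustration, not part of any particular OCR system.

```python
import unicodedata

# The four presentation forms of ARABIC LETTER BEH (U+0628) in the
# "Arabic Presentation Forms-B" block. A recogniser sees four different
# shapes, but all of them stand for the same logical letter.
BEH_FORMS = ["\uFE8F", "\uFE90", "\uFE91", "\uFE92"]

def fold_presentation_forms(text: str) -> str:
    """Map positional glyph variants back to their base letters (NFKC)."""
    return unicodedata.normalize("NFKC", text)

for glyph in BEH_FORMS:
    base = fold_presentation_forms(glyph)
    print(f"U+{ord(glyph):04X} {unicodedata.name(glyph)} -> U+{ord(base):04X}")
```

In practice an OCR model would usually predict the base letter directly and leave shaping to the font renderer; the folding step simply shows how many visual classes collapse into one label.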

Devanagari is equally challenging, with more than 40 base characters and numerous conjunct consonants, in which two or more consonants fuse into a single glyph. To decipher Devanagari, OCR models must accurately identify these complex combinations so that the output remains legible.
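
A rough sketch of what "identifying combinations" means at the text level: in Unicode, a conjunct is encoded as consonant + virama + consonant, so the label sequence an OCR model emits has to be grouped into clusters. The function below is a simplified grouping rule written for illustration (the name `split_clusters` is hypothetical); it ignores joiners such as ZWJ/ZWNJ and is not a full implementation of Unicode grapheme segmentation.

```python
import unicodedata

VIRAMA = "\u094D"  # DEVANAGARI SIGN VIRAMA, joins consonants into conjuncts

def split_clusters(text: str) -> list[str]:
    """Group code points into rough orthographic clusters.

    A code point stays in the current cluster if it is a combining mark
    (vowel sign, virama, nasalisation) or if it directly follows a virama.
    """
    clusters: list[str] = []
    for ch in text:
        is_mark = unicodedata.category(ch).startswith("M")
        follows_virama = bool(clusters) and clusters[-1].endswith(VIRAMA)
        if clusters and (is_mark or follows_virama):
            clusters[-1] += ch
        else:
            clusters.append(ch)
    return clusters

# The word "kshatriya": 8 code points that render as 3 visual clusters.
word = "\u0915\u094D\u0937\u0924\u094D\u0930\u093F\u092F"
print(len(word), split_clusters(word))
```

Eight code points become three clusters here, which is why per-character accuracy figures for Devanagari depend heavily on whether they count code points or clusters.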

While state-of-the-art OCR systems can achieve impressive accuracy rates for printed Arabic and Devanagari, performance plummets when they are faced with handwritten text, where inconsistencies in character formation significantly complicate the process. This highlights the crucial role of data diversity in training AI-driven OCR models: a wider range of fonts and handwriting styles in the training data leads to better performance, especially for less common languages.

Even within a single script, like Arabic, the placement of diacritics can radically alter meaning. This calls for advanced models that employ context-aware analysis to understand these nuances.
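
As a small illustration of why diacritics matter, the sketch below strips Arabic vowel marks from two different words and shows that they collapse to the same consonant skeleton. The example words and the helper name `strip_marks` are chosen for illustration; a real system would need context to restore or interpret the marks rather than discard them.

```python
import unicodedata

def strip_marks(text: str) -> str:
    """Remove nonspacing marks (category Mn), which include Arabic harakat."""
    return "".join(ch for ch in text if unicodedata.category(ch) != "Mn")

kataba = "\u0643\u064E\u062A\u064E\u0628\u064E"  # "he wrote", fully vowelled
kutub = "\u0643\u064F\u062A\u064F\u0628"         # "books", vowelled

print(kataba == kutub)                            # the vowelled forms differ
print(strip_marks(kataba) == strip_marks(kutub))  # the bare skeletons match
```

If the recogniser drops or misplaces a mark, the two words become indistinguishable at the character level, which is exactly where context-aware models have to step in.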

Overall, it seems like we're on the cusp of a new era of OCR where the process can be near real-time, rapidly transforming scanned documents into editable formats with impressive accuracy. This isn't just about text recognition, however. AI-enhanced OCR is becoming adept at identifying structured elements like tables and forms, significantly automating administrative tasks across languages.

The introduction of convolutional neural networks (CNNs) has been a game-changer, allowing machines to learn directly from images, rather than relying on pre-defined rules, thereby improving the identification of complex scripts. The availability of open-source OCR tools with multilingual support further democratizes access to translation technology, a positive development for researchers and developers alike.

Ongoing research into adversarial training techniques is a promising way to tackle the persistent challenge of script ambiguity. The aim is for OCR systems to differentiate more reliably between the visually similar characters commonly found in Devanagari and Arabic, which should eventually lead to more accurate translations.

Machine Learning Boosts Recognition Accuracy for Handwritten Greek Texts

Machine learning is significantly improving the accuracy of recognizing handwritten Greek texts. This is a game-changer for historians and researchers who are trying to decipher ancient manuscripts. Traditional OCR systems struggled to handle the complex and varied styles of old Greek handwriting. However, new Handwritten Text Recognition (HTR) models, powered by deep learning, are achieving impressive accuracy rates. These models learn from diverse datasets and are adept at recognizing even the most intricate scripts. This means that we can now read these ancient texts more clearly and efficiently. Not only does this improve transcription accuracy, but it also leads to better overall efficiency for OCR applications across a wide range of languages and text types.

Machine learning is proving incredibly effective in deciphering handwritten Greek texts, often exceeding accuracy rates of 95% under favorable conditions. This is a significant leap forward compared to traditional OCR systems, which frequently struggle to achieve above 70% accuracy on the same datasets. One of the main benefits of AI-powered OCR is its adaptability. It can learn from various handwriting styles, constantly refining its recognition abilities without needing extensive retraining.
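
The figures above do not say how accuracy is measured. A common choice, assumed here, is character accuracy: one minus the character error rate (CER), where CER is the edit distance between the reference and the OCR output divided by the reference length. A minimal sketch, with hypothetical function names:

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance via dynamic programming, one row at a time."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def character_accuracy(ref: str, hyp: str) -> float:
    """1 - CER, where CER = edit distance / reference length."""
    if not ref:
        raise ValueError("reference must not be empty")
    return 1.0 - edit_distance(ref, hyp) / len(ref)

reference = "\u03BB\u03CC\u03B3\u03BF\u03C2"   # the Greek word "logos"
hypothesis = "\u03BB\u03CC\u03B3\u03C9\u03C2"  # omicron misread as omega
print(character_accuracy(reference, hypothesis))
```

One wrong letter in a five-letter word already drops character accuracy to 80%, so a 95% figure implies roughly one error every twenty characters.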

The algorithms go beyond simply recognizing letter shapes. They factor in character-level features like stroke direction and curvature, which helps differentiate visually similar letters like ‘ο’ (omicron) and ‘ω’ (omega). Furthermore, they leverage contextual information, like the surrounding words, to disambiguate characters such as ‘ε’ (epsilon) and ‘η’ (eta), greatly reducing the chance of misinterpretation.
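
The contextual step can be sketched in a very reduced form: instead of a full language model over surrounding words, the example below uses a tiny word list to overrule a recogniser that slightly prefers the wrong letter. The candidate scores, the lexicon, and the function name `best_word` are all made up for illustration.

```python
from itertools import product

EPSILON, ETA = "\u03B5", "\u03B7"

# Hypothetical per-position candidates from a recogniser: (character, score).
# Only the first position is ambiguous, and the visual score favours epsilon.
candidates = [
    [(EPSILON, 0.55), (ETA, 0.45)],
    [("\u03BC", 0.98)],  # mu
    [("\u03AD", 0.97)],  # epsilon with tonos
    [("\u03C1", 0.99)],  # rho
    [("\u03B1", 0.99)],  # alpha
]

# Toy lexicon (assumed): two Greek words for "day".
LEXICON = {"\u03B7\u03BC\u03AD\u03C1\u03B1", "\u03BC\u03AD\u03C1\u03B1"}

def best_word(candidates, lexicon):
    """Pick the highest-scoring candidate string that is a known word."""
    best, best_score = None, 0.0
    for combo in product(*candidates):
        word = "".join(ch for ch, _ in combo)
        score = 1.0
        for _, s in combo:
            score *= s
        if word in lexicon and score > best_score:
            best, best_score = word, score
    return best

print(best_word(candidates, LEXICON))
```

Taking the top-scoring letter at each position would output a non-word starting with epsilon; the lexicon check recovers the eta reading. Enumerating every combination only works for short words, so real systems use beam search or a trained language model instead.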

The use of convolutional neural networks (CNNs) has been a game changer. CNNs allow OCR models to directly learn from images, removing the reliance on pre-defined rules. This approach helps them identify complex scripts with increased accuracy.
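
For readers who have not seen one, the core operation of a CNN layer is small enough to write out by hand. The sketch below slides a 3x3 filter over a toy 5x5 bitmap in plain Python. The filter weights are hand-written here purely for illustration; in a trained network they would be learned from data, which is the point made above.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# 5x5 binary bitmap containing a single vertical stroke in column 2.
glyph = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]

# Hand-written vertical-edge filter; a trained CNN would learn such weights.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(glyph, vertical_edge)
for row in feature_map:
    print(row)
```

The response is positive where the stroke sits on the right of the window and negative where it sits on the left, so the filter encodes which side of a stroke it is on. Stacking many such learned filters is what lets a network build up from strokes to whole characters.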

However, while impressive progress has been made, there are still challenges. The performance of AI-powered OCR can plummet when encountering difficult conditions such as poor handwriting or overlapping characters. This highlights the need for continuous improvement in algorithm designs.

It’s worth noting that the cost of implementing these technologies is steadily decreasing, making it more accessible for small businesses and organizations that rely on translation and document processing. This democratization of access is a significant development for those seeking efficient text recognition solutions.

Lastly, benchmark testing has shown that combining multiple algorithms in an ensemble approach can significantly boost recognition accuracy for handwritten Greek. These ensemble models consistently outperform single-model systems.
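
One simple way to combine models, shown here as a sketch rather than as the method used in any particular benchmark, is a per-character majority vote over the transcriptions produced by several recognisers. The example assumes the outputs are already aligned and of equal length, which real ensembles cannot take for granted.

```python
from collections import Counter

def majority_vote(transcriptions):
    """Combine equal-length transcriptions by per-position majority vote."""
    if len({len(t) for t in transcriptions}) != 1:
        raise ValueError("this sketch assumes already-aligned outputs")
    voted = []
    for chars in zip(*transcriptions):
        # most_common(1) returns the character with the highest count;
        # ties resolve to the character seen first (the earliest model).
        voted.append(Counter(chars).most_common(1)[0][0])
    return "".join(voted)

# Three hypothetical model outputs for the same word (accents omitted).
model_a = "\u03BB\u03BF\u03B3\u03BF\u03C2"  # correct
model_b = "\u03BB\u03C9\u03B3\u03BF\u03C2"  # first omicron read as omega
model_c = "\u03BB\u03BF\u03B3\u03C9\u03C2"  # second omicron read as omega

print(majority_vote([model_a, model_b, model_c]))
```

Each faulty model makes a different mistake, so the vote recovers the correct word. The gain disappears when the models share the same blind spots, which is why ensembles are usually built from architecturally different recognisers.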

Neural Networks Enhance Character Detection in Complex Chinese Characters

Neural networks are revolutionizing the way we recognize complex Chinese characters. Traditional methods struggled with the vast number of characters, over 7,000 in common usage, and their intricate structures. Convolutional neural networks (CNNs), specifically, are proving to be remarkably effective. One notable development is the FANMCCD model, designed to quickly identify Chinese characters in historical documents, adjusting for variations in size and location. While CNNs have greatly improved accuracy by focusing on local features, there's still room for improvement. The full potential of character topology – the way a character is structured – is yet to be fully leveraged, hindering recognition accuracy. The continuous development of neural networks is crucial for overcoming these hurdles, making Chinese character translation faster and more reliable.

Neural networks are proving to be quite powerful for handling the complexity of Chinese characters. Traditional OCR methods often falter because the full inventory, including rare and historical forms, runs to more than 50,000 distinct characters. Neural networks trained on large datasets can learn to recognize even the most subtle variations between characters, particularly those found in historical texts. Models that also learn to anticipate characters from the surrounding words gain a contextual understanding that helps reduce misinterpretations of similar-looking characters.

It's fascinating how these models learn from a vast range of text sources, accounting for regional differences and font distortions, which makes the OCR more broadly applicable. And the speed is quite impressive! Some systems can handle up to 50 frames per second in real time, which could be really useful for things like live translation during presentations or conferences. Reported accuracy gains of more than 30% over traditional methods on handwritten characters are particularly exciting. It seems like the models are getting quite good at recognizing the unique variations in stroke order and writing styles.

It's also interesting how these systems can leverage knowledge from languages with simpler scripts to enhance their accuracy with Chinese characters. This transfer learning idea could be a significant efficiency boost, as it would require less training data and time. The use of multi-layer perceptrons, which can extract hierarchical features, seems key to understanding the intricate structures found in Chinese characters.

The inclusion of post-processing error correction algorithms, which employ language models to ensure proper word formation, helps address the errors that can arise from ambiguous character recognition. And as hardware costs decline and open-source neural network frameworks mature, this technology is becoming more accessible to small businesses, which could lead to some interesting innovations in translation.
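
A heavily simplified sketch of such a post-processing step: a table of visually confusable characters plus bigram counts standing in for a real language model. The confusion pairs are genuine look-alikes, but the counts, the table contents, and the function names are invented for illustration.

```python
WEI = "\u672A"  # 未 "not yet"
MO = "\u672B"   # 末 "end"
JI = "\u5DF1"   # 己 "self"
YI = "\u5DF2"   # 已 "already"

# Visually similar pairs a recogniser may swap (toy confusion table).
CONFUSABLE = {MO: WEI, WEI: MO, YI: JI, JI: YI}

# Made-up bigram counts standing in for a real language model.
BIGRAM_COUNTS = {
    WEI + "\u6765": 120,  # 未来 "future"
    "\u81EA" + JI: 300,   # 自己 "oneself"
    "\u5468" + MO: 80,    # 周末 "weekend"
    YI + "\u7ECF": 250,   # 已经 "already"
}

def context_score(chars, i, candidate):
    """Sum of bigram counts formed with the left and right neighbours."""
    score = 0
    if i > 0:
        score += BIGRAM_COUNTS.get(chars[i - 1] + candidate, 0)
    if i + 1 < len(chars):
        score += BIGRAM_COUNTS.get(candidate + chars[i + 1], 0)
    return score

def correct(text: str) -> str:
    """Swap a character for its look-alike when context strictly prefers it."""
    chars = list(text)
    for i, ch in enumerate(chars):
        alt = CONFUSABLE.get(ch)
        if alt and context_score(chars, i, alt) > context_score(chars, i, ch):
            chars[i] = alt
    return "".join(chars)

print(correct(MO + "\u6765"))  # misread "future" is repaired
print(correct("\u5468" + MO))  # "weekend" is already fine and is kept
```

The strict comparison means a character is only changed when the context actively supports the alternative, which keeps the corrector from rewriting text it knows nothing about.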

The development of hybrid models, combining machine learning and traditional pattern recognition techniques, could be especially helpful for dealing with noisy backgrounds and complex layouts commonly found in Chinese texts. Overall, it seems like neural networks are driving significant progress in OCR for complex languages like Chinese, with the potential to revolutionize translation and information access.

Adaptive Algorithms Improve Processing of Cyrillic Alphabets in Various Fonts

Adaptive algorithms are revolutionizing how we process Cyrillic alphabets, the foundation of languages like Russian and Ukrainian. Traditional OCR struggled with the many different fonts and styles of Cyrillic scripts, leading to inaccurate translations. But now, machine learning is helping these algorithms learn to recognize these subtle variations, leading to improved accuracy and faster processing. This is a game-changer for people working with multilingual texts and documents, as it improves the flow of information across languages. While these advancements are exciting, it's important to recognize that the development of context-aware OCR, which understands the nuances of language beyond just the letters, is still a work in progress. As AI technology continues to develop, we can expect further improvements in both speed and accuracy, potentially making translation more accessible and efficient for everyone.

Adaptive algorithms have been a game-changer for OCR systems handling Cyrillic alphabets. Cyrillic letters can look quite different across upright, italic, and handwritten cursive styles, and coping with that range is critical for achieving high recognition accuracy across various fonts. The key to success seems to lie in training datasets that include a mix of printed and handwritten texts, which makes the OCR systems much more robust in real-world applications.

What's surprising is that even subtle font variations in Cyrillic can significantly impact the OCR's effectiveness. Adaptive algorithms that are attuned to these typographic nuances can achieve remarkable recognition accuracy, often exceeding 95% with properly trained models. It's not just about base letters; these algorithms also have to handle diacritical marks in Cyrillic, such as the breve on й and the diaeresis on ё, which distinguish those letters from и and е and affect pronunciation and meaning. This requires sophisticated context-aware algorithms for disambiguation.

Speed is a crucial factor in OCR performance. It's impressive to see how state-of-the-art algorithms can achieve near real-time processing of Cyrillic text, making rapid data entry and translation tasks possible for businesses operating in regions using these scripts.

Despite these advancements, some adaptive algorithms struggle with heavily stylized cursive Cyrillic. This highlights the ongoing need for improved training methods and richer datasets to enhance recognition rates further. The development of open-source platforms has democratized AI-enhanced OCR technologies, making them accessible to smaller organizations without the high costs associated with proprietary software.

Experimental approaches using ensemble methods, which combine several algorithms, have shown promising results in boosting OCR accuracy for complex Cyrillic scripts, often outperforming traditional single-model systems. Integrating adaptive algorithms with machine learning techniques also allows systems to learn from user corrections, potentially lowering the error rate over time as models adapt to specific use cases. Innovations in error correction algorithms are proving essential for producing coherent translations from Cyrillic, especially given the high occurrence of visual similarities between certain characters. Together, these developments mark a significant step towards more reliable document processing.
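
One concrete source of visual similarity is that several Cyrillic letters are near-identical to Latin ones, so a multilingual recogniser can emit a Latin "o" in the middle of a Russian word. The sketch below shows one possible correction rule, written for illustration only: if most letters in a token are Cyrillic, map the Latin look-alikes back. The mapping table and the majority threshold are assumptions.

```python
import unicodedata

# Latin letters that are near-identical to Cyrillic ones in most fonts.
LATIN_TO_CYRILLIC = str.maketrans({
    "a": "\u0430", "e": "\u0435", "o": "\u043E", "p": "\u0440",
    "c": "\u0441", "x": "\u0445", "y": "\u0443",
})

def is_cyrillic(ch: str) -> bool:
    """True if the Unicode name of the character starts with CYRILLIC."""
    return unicodedata.name(ch, "").startswith("CYRILLIC")

def fix_homoglyphs(token: str) -> str:
    """Replace Latin look-alikes when most letters in the token are Cyrillic."""
    letters = [ch for ch in token if ch.isalpha()]
    cyrillic = sum(is_cyrillic(ch) for ch in letters)
    if letters and cyrillic * 2 > len(letters):
        return token.translate(LATIN_TO_CYRILLIC)
    return token

noisy = "\u043Co\u0441\u043A\u0432\u0430"  # "moskva" with a Latin "o" inside
fixed = fix_homoglyphs(noisy)
print(noisy == fixed, all(is_cyrillic(ch) for ch in fixed))
```

Tokens that are mostly Latin, such as an English word inside a Russian document, are left untouched, which is the reason for the majority check.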

Deep Learning Models Excel at Decoding Ancient Egyptian Hieroglyphs

Deep learning models, particularly convolutional neural networks (CNNs), are showing promise in decoding ancient Egyptian hieroglyphs. A model called Glyphnet has been developed specifically for this task and has outperformed standard image recognition models. The research focuses on creating a deep learning model capable of detecting and recognizing hieroglyphs directly from images, potentially eliminating the need for cloud computing and allowing for faster, more independent translations. This has the potential to revolutionize the way we interact with ancient texts, providing scholars and historians with new tools to decipher and understand the past. While there are still challenges, the success of these models underscores the potential for AI to play a significant role in archaeological and linguistic research.

Deep learning is revolutionizing how we decipher ancient Egyptian hieroglyphs, making rapid progress where traditional methods took decades. A properly trained model can accurately recognize over 700 hieroglyphic symbols, outperforming standard image recognition networks. It's not just about individual symbols, though. These models learn the context of surrounding characters, similar to how we understand language by considering nearby words. This contextual understanding is critical for deciphering meaning in hieroglyphs, as many symbols carry multiple meanings depending on their placement. Interestingly, the success of these models can even be enhanced by leveraging knowledge from modern scripts, effectively adapting algorithms for a new task. This transfer learning approach is especially beneficial as it requires less training data and time, ultimately speeding up the process.

It's fascinating to consider how the quality of images plays a crucial role in a model's accuracy. Deep learning models are being designed to handle various resolutions and image conditions, making them robust enough for historical documents often found in damaged or faded states. Furthermore, the rise of open-source projects in this area has created a collaborative ecosystem where researchers and engineers can share datasets, tools, and knowledge. This collaborative approach accelerates innovation and brings us closer to real-time hieroglyph translation, offering exciting possibilities for museums, historical sites, and classrooms.

Imagine a virtual museum tour with instant interpretation of hieroglyphs! These models, however, aren't just focusing on accurate symbol recognition; they are also incorporating error correction mechanisms that improve semantic understanding. This means capturing nuances and subtle meanings often missed by traditional methods. Furthermore, by integrating techniques from computer vision, deep learning models can analyze patterns within the glyphs themselves, providing insights that would have been difficult to achieve with traditional OCR methods.

It seems we're on the cusp of a new era of hieroglyph translation. The ability to continuously improve with more data ensures that models become more accurate and scalable over time. As more ancient texts become available, AI will play an increasingly crucial role in accessing and preserving the knowledge embedded within them. It's a testament to how AI is expanding our understanding of the past and making history accessible for everyone.

Transfer Learning Techniques Extend OCR Capabilities to Thai Script

Transfer learning techniques are changing the way we approach Optical Character Recognition (OCR) for Thai script. The use of pre-trained models, which have already learned from other languages, allows for a significant boost in accuracy for Thai text recognition. This is a major step forward, as traditional OCR methods struggled to handle the complexities of Thai script, often resulting in misinterpretations.

Deep learning is playing a key role in these improvements, particularly convolutional neural networks (CNNs). These advanced algorithms enable machines to learn directly from images, understanding not just the individual characters but the context in which they appear. This allows for better error correction and improved overall accuracy.

While these developments are exciting, there are still challenges ahead. Creating diverse and high-quality training datasets for Thai is essential to ensure that the OCR models can handle the full range of variations found in written Thai, from different fonts to handwriting styles.

As AI continues to advance, we can expect even more sophisticated OCR systems for languages like Thai, making data processing more efficient and accurate for a wider range of applications.

Transfer learning, where models trained on one task are repurposed for another, has been particularly helpful in extending OCR capabilities to Thai script. This is quite remarkable given Thai script's unique characteristics, such as tone marks and vowel signs written above or below the consonants, which differ greatly from Western alphabets. It's impressive how these models can adapt to this complexity, achieving a high level of accuracy.
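
The mechanics of the feature-extraction flavour of transfer learning can be shown with a toy: keep a feature extractor frozen and train only a small new classification head on the target script. In the sketch below the "pretrained" extractor is a hand-written stand-in (row and column sums of a bitmap), not a real network, and the two glyph classes are invented; only the structure, frozen features plus a trainable head, carries over to real systems.

```python
def frozen_features(bitmap):
    """Stand-in for a pretrained, frozen feature extractor.

    Returns row sums followed by column sums. In a real system these would
    be activations from convolutional layers trained on another script.
    """
    rows = [sum(r) for r in bitmap]
    cols = [sum(c) for c in zip(*bitmap)]
    return rows + cols

def train_head(samples, labels, epochs=20, lr=0.1):
    """Train only a new linear head (perceptron) on top of frozen features."""
    n = len(frozen_features(samples[0]))
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        for bitmap, y in zip(samples, labels):  # y is +1 or -1
            x = frozen_features(bitmap)
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * activation <= 0:             # misclassified: update head
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
    return weights, bias

def predict(head, bitmap):
    """Classify a bitmap as +1 or -1 using the trained head."""
    weights, bias = head
    x = frozen_features(bitmap)
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else -1

# Two toy 3x3 glyph classes for the "new" script: vertical vs horizontal bar.
vertical = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
horizontal = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]

head = train_head([vertical, horizontal], [1, -1])
print(predict(head, vertical), predict(head, horizontal))
```

Because only the head's handful of weights are updated, two labelled examples are enough here; that is the data-efficiency argument for transfer learning in miniature.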

However, the challenge lies in the script's sheer complexity. Thai boasts 44 consonants, 15 vowel symbols, and various diacritics, posing a significant hurdle for OCR systems. It's not as straightforward as recognizing a simple alphabet. Thankfully, the development of OCR systems that can distinguish these intricate elements has helped mitigate errors related to character misrecognition, leading to more reliable translations.

The limited availability of annotated Thai text datasets has long been a barrier to progress in OCR technology. Researchers have been working on innovative augmentation techniques, which can artificially expand existing datasets, to overcome this hurdle. This is a promising development as it doesn't require extensive manual data labeling, making the process more efficient.
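
A minimal sketch of one such augmentation, small pixel shifts, is shown below in plain Python. The function names and the toy bitmap are illustrative; real pipelines typically add rotation, scaling, blur, and noise as well.

```python
def shift(bitmap, dx, dy):
    """Translate a bitmap by (dx, dy) pixels, padding with background (0)."""
    h, w = len(bitmap), len(bitmap[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            nr, nc = r + dy, c + dx
            if 0 <= nr < h and 0 <= nc < w:
                out[nr][nc] = bitmap[r][c]
    return out

def augment(bitmap, max_shift=1):
    """Return the original plus every shifted copy within max_shift pixels."""
    offsets = range(-max_shift, max_shift + 1)
    return [shift(bitmap, dx, dy) for dy in offsets for dx in offsets]

glyph = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
variants = augment(glyph)
print(len(variants))
```

One labelled sample becomes nine training samples that all keep the same label, with no extra annotation work.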

Furthermore, the Thai script presents another challenge for OCR systems: the absence of spaces between words. This makes it more difficult for traditional OCR systems to correctly segment words. However, newer models are implementing context-aware segmentation techniques to predict word boundaries in real time, thereby achieving greater accuracy.
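
To show what word segmentation involves, here is the classic dictionary-based baseline, greedy longest matching. This is deliberately simpler than the context-aware techniques mentioned above, and the three-word dictionary is a toy assumption.

```python
def longest_match_segment(text, dictionary):
    """Greedy left-to-right segmentation using the longest dictionary match."""
    max_len = max(len(w) for w in dictionary)
    words, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in dictionary:
                words.append(piece)
                i += size
                break
        else:
            # Unknown character: emit it alone so the loop always advances.
            words.append(text[i])
            i += 1
    return words

CHAN = "\u0E09\u0E31\u0E19"  # "I"
RAK = "\u0E23\u0E31\u0E01"   # "love"
KHUN = "\u0E04\u0E38\u0E13"  # "you"
DICTIONARY = {CHAN, RAK, KHUN}

sentence = CHAN + RAK + KHUN  # written without spaces, as in normal Thai
print(longest_match_segment(sentence, DICTIONARY))
```

Greedy matching fails when a longer dictionary word swallows the start of the next one, which is the gap that context-aware models are meant to close.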

The efficiency of these advanced OCR systems is impressive, with some capable of processing Thai texts at a rate of 25 frames per second. This rapid processing capability has opened up exciting possibilities for real-time applications, including translation in classrooms and live events. The speed and accuracy of these systems make them more accessible and useful in a variety of situations.
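
Frame rates translate directly into a time budget per frame, which is a useful way to read the figures quoted in this overview (25, 30, and 50 frames per second). A short calculation, with a hypothetical helper name:

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps

for fps in (25, 30, 50):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

At 25 frames per second, detection, recognition, and any segmentation or correction step together have 40 ms per frame; at 50 frames per second that shrinks to 20 ms.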

Furthermore, it's interesting to see how recent algorithms can handle multilingual documents containing Thai alongside other Southeast Asian languages. This is particularly relevant as our world becomes more globalized and inter-connected, requiring better solutions for handling diverse language documents.

The development of smart error correction algorithms is another interesting development. These algorithms not only improve character recognition but also leverage linguistic models to understand the context of sentences, helping to rectify common errors that occur in complex scripts like Thai. This step towards more sophisticated understanding of language is crucial for accurate translations.

Finally, we're also seeing continual learning capabilities in these modern OCR systems. This allows them to adapt to user-specific typographic styles over time, leading to improved personalization in text recognition. This adaptability is particularly useful for environments where documents may come in various fonts and styles, leading to more reliable translations.

Overall, advancements in OCR technology for Thai script are promising. While some challenges remain, it's exciting to witness the rapid progress being made in this area. The development of increasingly sophisticated models is making real-time translation and other applications more accessible, paving the way for a more multilingual and inclusive digital world.

Convolutional Networks Revolutionize Recognition of Armenian Alphabet

The Armenian alphabet is experiencing a revolution in recognition thanks to the rise of Convolutional Neural Networks (CNNs), a core part of AI-powered Optical Character Recognition (OCR). These advancements in deep learning are allowing machines to better grasp the nuances of Armenian script, including the variations in handwritten text that have long been a major hurdle for OCR systems. With access to massive training datasets, CNNs are refining OCR systems, making them more accurate and reliable, which is critical for efficiently extracting and translating data. As these technologies continue to evolve, they have the potential to unlock the processing of less-common alphabets, opening up broader access to multilingual documentation and translation capabilities. The promise of ongoing learning and refinement suggests a future where AI excels at text recognition, laying the groundwork for more effective interpretations across diverse writing systems.

Convolutional neural networks (CNNs) are having a profound impact on the way we recognize the Armenian alphabet, marking a significant advancement in AI-powered OCR technologies. Traditional methods struggled with the complexity of the Armenian script, often misinterpreting similar-looking characters. However, CNNs, trained on large datasets, have become remarkably adept at discerning intricate details within each character. This allows them to recognize the 38 unique characters, many with complex designs and subtle stroke variations, with unprecedented accuracy.

The real power of CNNs lies in their ability to learn and adapt. When deployed with a feedback loop, they can be fine-tuned on user corrections, becoming more accurate as more documents are processed. This is particularly beneficial in dynamic environments where handwriting styles and font variations are common. Modern models even process Armenian text in real time, reaching impressive speeds of 30 frames per second, making them suitable for live translation during meetings or presentations.

Beyond simple character identification, CNNs also factor in contextual awareness, understanding how the meaning of a word can be influenced by surrounding characters or diacritical marks. This nuanced interpretation goes beyond what traditional OCR systems could achieve, leading to more accurate translations. Further bolstering accuracy are advanced error correction mechanisms that analyze sentence structures to identify and correct misread characters, ensuring higher-quality translation outputs.

The adaptability of CNNs allows them to be trained simultaneously on multiple languages, like Russian or Persian, making them ideal for processing multilingual documents that contain Armenian text. To overcome the challenge of limited training data, researchers utilize data augmentation techniques, generating synthetic variations of existing samples to expand the learning pool. This method helps models learn to recognize characters in diverse fonts and handwriting styles.

Hybrid models, combining CNNs with traditional OCR algorithms, are proving incredibly effective in improving recognition rates, especially when dealing with mixed-format documents. The benefits extend even to historical Armenian texts where traditional OCR struggled. CNNs are now capable of deciphering manuscripts from high-resolution digitized images, opening doors to previously inaccessible knowledge.

This revolution in Armenian character recognition is also being driven by the growing accessibility of open-source OCR tools. Researchers and developers can now collaborate openly, creating a dynamic environment that fosters innovation and improves the quality of tools available to both academic and commercial users. This signifies a promising future for AI-powered OCR technology, with CNNs driving progress and making Armenian script more readily accessible in our increasingly multilingual world.
